AI Bias: An Investigation of Gender, Race, and Age Biases through Image Generation

Michelle Aguilera, Shahid Yassim, Carlos Luna

Writing for Engineering 21007

Professor Pamela Stemberg

The City College of New York

March 30, 2024

ABSTRACT

This study was designed to establish whether AI-generated images show bias in how they represent gender-neutral occupations. As AI-based tools become more common, students may use them for assignments. That concerns the education system, but this study only examines whether AI output is reliable enough to serve as inspiration for student work. Teachers would never accept a paper written by AI, but does the output have any use? Each group member generated 100 images with the same AI-powered image-generation tool, then sorted the images by race, age, and gender based on what the member could discern from each image. After the data for all images were gathered, the results were compared with published demographic statistics for each gender-neutral occupation. The results and the comparison are discussed accordingly.

INTRODUCTION

The brain is a complex organ with neural networks that allow human beings to perform seemingly basic tasks such as learning, decision-making, displaying emotion, reasoning, and speech and pattern recognition. Over the past few decades, scientists have been trying to replicate these cognitive functions through artificial intelligence. Artificial intelligence is a branch of computer science that uses computers to simulate human cognitive processes by feeding algorithms large sets of data.

Artificial intelligence has progressed steadily since its invention and continues to do so. Its applications are wide-ranging, and it is being implemented in areas where we once thought tasks could only be accomplished through human involvement and intelligence. Real-world examples include self-driving vehicles, virtual assistants such as Alexa or Siri, medical diagnosis, fraud detection, and generators such as ChatGPT or Craiyon.

However, artificial intelligence is still in its infancy, and AI generators such as ChatGPT and Craiyon can generate text and images that do not reflect reality.

In the study “A Comprehensive Study on Bias in Artificial Intelligence Systems: Biased or Unbiased AI, That’s the Question!”, the author, Elif Kartal, identifies several factors as the cause of bias in algorithms. Kartal argues that bias within artificial intelligence exists due to bias in data collection, bias in data set creation, and bias in preprocessing. Bias in data collection, for example, can come from human error: “This can be due to the researcher’s measurement error while collecting data or the data collection tools used in the measurement, such as sensors, cameras, surveys, and scales” (Kartal, 2022, Factors that Lead to Bias). It can also come from human biases that appear in self-reported answers such as surveys: “In cases where data is collected by gathering subjective data from people, such as surveys and interviews, some preconceptions, (including historical bias, racial bias, social-class bias, and so on) from the past will be indirectly transferred to the AI system’s decision mechanism” (Kartal, 2022, Factors that Lead to Bias). When biases are unknowingly fed to algorithms during data collection and input, the algorithms themselves start to show these biases and will either underrepresent or overrepresent certain demographics.

In this study, we delve further into this topic and investigate whether artificial intelligence generators, such as the image generator Craiyon, produce biased images. We hypothesize that if we enter a gender-neutral profession into an AI image generator, then the images the generator outputs will be biased and skewed from the actual demographics of the profession with respect to race, gender, and age.

MATERIALS AND METHODS

To conduct this study, we started by selecting three gender-neutral occupations. The occupations needed to be gender-neutral so that the image generator could not use gendered wording in the job title to decide which gender works in that field. For example, using “policeman” or “actress” could lead the algorithm to generate strictly men and strictly women, respectively. We eventually decided upon the professions of bartender, police officer, and teacher.

Next, we needed to find an image generator. We tried out several image generators before settling on Craiyon. We rejected the others because they either allowed only a certain number of attempts before asking for payment or credit card information, or allowed an image to be generated for a term only once before requiring a new term. Craiyon also had the useful feature of generating nine images at once.

While generating the images, we used only the terms listed and selected “none” when asked what style of images we wanted. We then took screenshots of the images and saved them to a Google document (Image Report). Next, we sorted the images into those we deemed usable and unusable. An image was considered unusable if it didn’t look human or wasn’t a complete picture. For example, when generating images for police officers, we would occasionally get images of torsos, mannequins, and articles of clothing an officer would wear. After deciding which images to use, we created charts to record the information we gathered. On these charts, we listed the race, gender, and age of the person in each image. For age, we created four age groups: 20 to 35, 35 to 50, 50 to 65, and 65+. After categorizing the images, we analyzed our findings and discussed their importance.
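The tallying step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure the group actually used: the function name `tally_percentages` and the example labels are hypothetical placeholders, and the study's own charts were filled in by hand.

```python
from collections import Counter


def tally_percentages(labels):
    """Return each label's share of the usable images, as a percentage."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        # No usable images were categorized, so there is nothing to report.
        return {}
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Hypothetical placeholder labels for illustration only, NOT the study's data.
example_gender_labels = ["male", "male", "female", "male"]
print(tally_percentages(example_gender_labels))
```

The same function could be applied separately to the race labels and the age-group labels for each occupation, since it only counts how often each category appears among the usable images.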

RESULTS

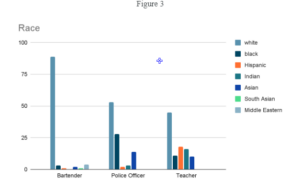

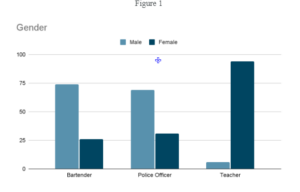

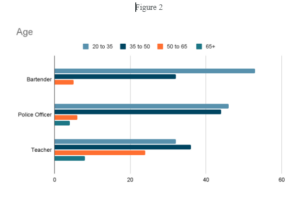

The results below are based on the 100 images generated for each gender-neutral occupation chosen for this study: bartender, police officer, and teacher. The images for each occupation were categorized by three demographics (gender, race, and age), and the resulting counts were plotted as bar graphs. These results were then compared with the averages reported in Zippia’s demographic research summaries for the same three occupations. The generated images for bartenders were very close to the Zippia figures for both age and race: Zippia records an average bartender age of 34.0 and a largest racial demographic of 65.0% white (Zippia career expert, BARTENDER DEMOGRAPHICS AND STATISTICS IN THE US), while the AI-generated bartenders were mostly categorized as white and mostly placed in the 20-35 age group (see Figure 2 and Figure 3). The main difference between the AI and Zippia results for bartenders is gender. The AI-generated images flip the Zippia ratio by showing more male than female bartenders (see Figure 1), whereas the average bartender gender ratio is 40% male and 60% female (Zippia career expert, BARTENDER DEMOGRAPHICS AND STATISTICS IN THE US).

For police officers, the AI-generated images came close to the average police gender ratio (see Figure 1). Although they still showed more males than females, the share of women was higher in the AI-generated images than in the average gender ratio of 83% male and 17% female (Zippia career expert, POLICE OFFICER DEMOGRAPHICS AND STATISTICS IN THE US). Similarly, the share of white officers was lower in the AI-generated images, which showed more Black and Asian officers along with a few others (see Figure 3), compared to the average of 60% white officers (Zippia career expert, POLICE OFFICER DEMOGRAPHICS AND STATISTICS IN THE US). The age distribution of the AI-generated images was roughly in line with the average age for police officers.

Finally, the results for teachers were quite close to the averages found on Zippia. The AI-generated images came very close to the average teacher age and gender ratio, except that they showed a higher share of females than the average ratio of 26% male and 74% female (Zippia career expert, TEACHER DEMOGRAPHICS AND STATISTICS IN THE US). For race, white remained the largest group, but the AI-generated images showed more variety than the average teacher racial demographics, with higher percentages of both Indian and Hispanic teachers (see Figure 3).
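The comparison against the Zippia gender ratios quoted in this section can be expressed as a difference in percentage points. The sketch below is a hedged illustration only: the reference values are the Zippia figures cited above, while the function name `percentage_point_gap` and the "generated" shares are hypothetical placeholders that do not reproduce the values in Figure 1.

```python
# Reference gender shares (percent), as quoted from Zippia in this report.
ZIPPIA_GENDER = {
    "bartender": {"male": 40.0, "female": 60.0},
    "police officer": {"male": 83.0, "female": 17.0},
    "teacher": {"male": 26.0, "female": 74.0},
}


def percentage_point_gap(generated, reference):
    """Generated share minus reference share per category, in percentage points.

    A positive value means the category is overrepresented in the generated
    images; a negative value means it is underrepresented.
    """
    categories = sorted(set(generated) | set(reference))
    return {
        c: round(generated.get(c, 0.0) - reference.get(c, 0.0), 1)
        for c in categories
    }


# Hypothetical generated shares for illustration only, NOT the study's results.
hypothetical_generated = {"male": 70.0, "female": 30.0}
gap = percentage_point_gap(hypothetical_generated, ZIPPIA_GENDER["bartender"])
print(gap)
```

Reporting the gap per category, rather than a single summary number, keeps it visible which groups are overrepresented and which are underrepresented.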

CONCLUSION

In conclusion, the experiment was a success: it not only supported our personal theories about AI generating biased output but also validated our hypothesis on the matter. As we reflect on the results, it becomes evident that biases in AI have real implications, and making these facts more widely known is key to the future development of these engines.

As the results of the data collection show (An Investigation of Gender, Race, and Age Biases through Image Generation; Image Report), biases can be found in each of the areas researched. These results deserve attention because of the ethical implications of AI image generation for inclusivity and for the fair representation of race and culture. It is important that we as a society remain unbiased towards all groups of people and ensure that our technologies reflect that.

The results of the experiment (An Investigation of Gender, Race, and Age Biases through Image Generation) are significant because they serve as an example of what happens when generated data does not reflect the demographics of reality, whether in terms of race, gender, or age. This is an important issue because it is widely controversial, it is discriminatory, and our results reinforce other research done in a similar fashion that aims to expose biases in AI (Kuck, 2023). That article introduces the acronym “SORD”, which stands for Stereotypes, Objectification, Racism, and Datasets, and states that generative AI seems to follow this particular pattern of biases, which is often overlooked by developers. From this we can conclude that, for the time being, responsibility for generative AI bias lies not only with the AI’s cognitive model but also with developers who do not take the necessary corrective measures. This allows AI models to adopt the stereotypes of their developers, leading to an unrealistic generative engine. The next step is to presume that the issue is directly linked to the AI cognitive model, the data feeders, and developers’ mishaps, and to revisit those parts for reconstruction rather than overloading these AI databases with more information.

REFERENCES

Kartal, E. (2022). A Comprehensive Study on Bias in Artificial Intelligence Systems: Biased or Unbiased AI, That’s the Question!

Kuck, K. (2023, December 12). Generative Artificial Intelligence: A Double-Edged Sword.

Zippia career expert. Bartender Demographics and Statistics in the US.

Zippia career expert. Police Officer Demographics and Statistics in the US.

Zippia career expert. Teacher Demographics and Statistics in the US.